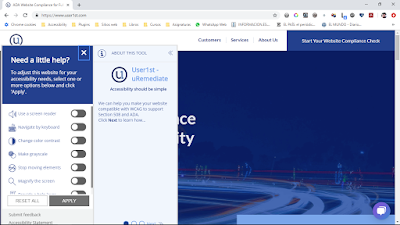

Automated accessibility testing tools have matured to the point where it is easy to find dozens of thorough, reliable options that test for adherence to the Web Content Accessibility Guidelines (WCAG). There are browser extensions, command-line tools, and tools that can be integrated with continuous integration systems.

At every level of integration, these tools provide a good starting point for testing, point to patterns of errors that humans can then look out for, and can catch issues before they go to production.

Consider the following scenarios:

- A content editor removes the contents of an h2 tag, unaware that the tag remains, causing confusion for screen reader users.

- The theme of a website includes a ‘skip to content’ link at the top that links to a ‘content’ div out of the box. The developer renames that div, breaking the link. Now keyboard-only users have no way to skip to the content and have to tab through every navigation link.

- A designer prefers placeholder text to a field label, unaware that this may present an unlabeled field to people using a screen reader.

These issues (and many more) are easy to miss, and they are very common. If you’re not using an automated testing tool, your site probably has similar issues.
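To make the three scenarios concrete, here is a minimal sketch of how a script might flag them, using only Python's standard `html.parser` module. It is not how any particular accessibility tool works; the names `ScenarioChecker`, `find_issues`, and `SAMPLE` are hypothetical, and the checks are deliberately simplified (for example, an input wrapped inside its `<label>` is not recognised, and a heading containing only an image counts as empty).

```python
from html.parser import HTMLParser


class ScenarioChecker(HTMLParser):
    """Collects enough page structure to flag the three scenarios (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.ids = set()            # every id attribute seen on the page
        self.fragment_links = []    # targets of same-page links such as "#content"
        self.label_targets = set()  # ids referenced by <label for="...">
        self.inputs = []            # attribute dicts of user-facing <input> elements
        self.empty_headings = 0     # headings that contain no text
        self._heading = None        # tag name of the heading currently open, if any
        self._heading_text = ""

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if attrs.get("id"):
            self.ids.add(attrs["id"])
        href = attrs.get("href") or ""
        if tag == "a" and href.startswith("#") and len(href) > 1:
            self.fragment_links.append(href[1:])
        if tag == "label" and attrs.get("for"):
            self.label_targets.add(attrs["for"])
        if tag == "input" and attrs.get("type", "text") not in ("hidden", "submit", "button"):
            self.inputs.append(attrs)
        if tag in ("h1", "h2", "h3", "h4", "h5", "h6"):
            self._heading, self._heading_text = tag, ""

    def handle_data(self, data):
        if self._heading:
            self._heading_text += data

    def handle_endtag(self, tag):
        if tag == self._heading:
            if not self._heading_text.strip():
                self.empty_headings += 1
            self._heading = None


def find_issues(html):
    """Return a list of human-readable findings for the given HTML string."""
    checker = ScenarioChecker()
    checker.feed(html)
    checker.close()
    issues = []
    if checker.empty_headings:
        issues.append(f"{checker.empty_headings} empty heading(s)")
    for target in checker.fragment_links:
        if target not in checker.ids:
            issues.append(f"link to #{target} has no matching id")
    for attrs in checker.inputs:
        labelled = (
            attrs.get("id") in checker.label_targets
            or attrs.get("aria-label")
            or attrs.get("aria-labelledby")
        )
        if not labelled:
            issues.append("input without a label")
    return issues


# Made-up markup that reproduces all three scenarios at once.
SAMPLE = """
<a href="#content">Skip to content</a>
<div id="main"><h2></h2>
<input type="text" placeholder="Email"></div>
"""

for issue in find_issues(SAMPLE):
    print(issue)
```

Real tools apply far more rules than this and handle many edge cases that the sketch ignores, which is the main reason to use one rather than writing your own checks.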

I spent several weeks comparing these three tools in preparation for a presentation at DrupalCon Nashville. Each has a browser extension and an array of options for testing on the command line with continuous integration possibilities. They are all effective for testing a wide range of issues. The differences mainly lie in the user experience and in the scope of the tools.

An Important Caveat

Before you conclude that an automated accessibility tool will do all of the work required to make your website accessible, we need to talk about what these tools cannot do. If your aim is to provide a truly accessible experience for all users, a deeper understanding of the issues and the intent behind the guidelines is required.

The advantages and limitations of automated tools: a few examples

Automated tools will suffice for the following:

- Does the image have alt text?

- Does the form field have a label and description?

- Does the content have headings?

- Is the HTML valid?

- Does the application UI follow WCAG guideline X?

Human intervention is required for the following:

- Is the alt text accurate given the context?

- Is the description easy to understand?

- Do the headings represent the correct hierarchy?

- Is the HTML semantic?

- Does the application UI behave as expected?

While automated tools can discover many critical issues, there are even more issues that need human analysis. Vague or misleading alt text is just as bad from a usability standpoint as missing alt text, and an automated tool won’t catch it. You need someone to go through the site with a sharp and trained eye.
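The gap between the two lists can be illustrated with a small sketch of a presence-only check. It assumes nothing about how real tools are implemented; `AltPresenceChecker` and `images_missing_alt` are hypothetical names, and the markup is made up for the example.

```python
from html.parser import HTMLParser


class AltPresenceChecker(HTMLParser):
    """Records images that have no alt attribute at all (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.missing = []  # src of each <img> with no alt attribute

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        # An empty alt="" is treated as present, since it is valid for decorative images.
        if tag == "img" and "alt" not in attrs:
            self.missing.append(attrs.get("src"))


def images_missing_alt(html):
    """Return the src of every image in the HTML string that lacks an alt attribute."""
    checker = AltPresenceChecker()
    checker.feed(html)
    checker.close()
    return checker.missing


# The second image passes the check even though alt="image" tells the user nothing.
page = '<img src="chart.png"><img src="team.jpg" alt="image">'
print(images_missing_alt(page))
```

The check can confirm that an attribute exists, but deciding whether "image" is a useful description of `team.jpg` in its context still takes a person.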

In the end, fully testing for accessibility issues requires a combination of automated and manual testing. Here is a great article on key factors in making your website accessible. Automated testing tools cover the first pass: they provide a good starting point for testing, point to patterns of errors that humans can then look out for, and catch issues before they go to production.

Whether you are a developer, a QA tester, or a site owner, and whatever your technical skill level is, automated accessibility testing tools can and should become an indispensable part of your testing toolkit.